Pre(r)amble

This is part of an (aspirational) series of posts documenting some of the process of (building|cat herding an AI agent to build) an easily hosted Python teaching tool built with just front-end JS and a WASM port of MicroPython.

- You can find Part 1 here

- You can find Part 2 here

- This is Part 3!

- You can find Part 4 here

- You can find Part 5 here

- You can find Part 6 here

This week I was really excited about getting the Abstract Syntax Tree feedback and testing working, as well as just tightening up the user experience a bit, hiding knobs and dials when they weren’t used, etc. I’m getting close to the end of my initial feature list!

What we had before

See last week’s post for some of the details and screenshots.

- Unit testing for user code (stdin/stdout/stderr)

- Methods for loading different programming problem configurations

- Basic authoring page for configuring configurations

What’s happened since

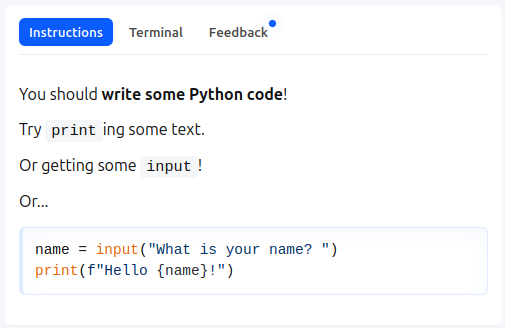

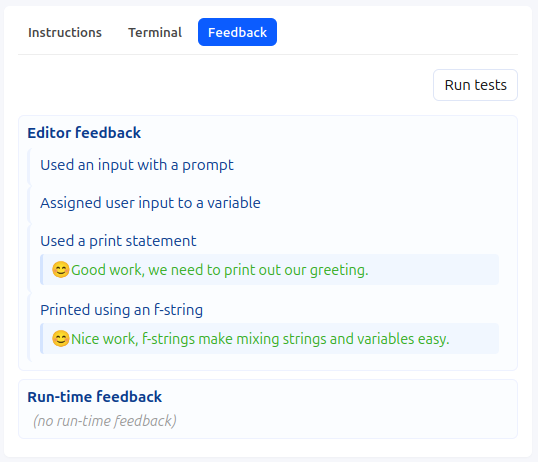

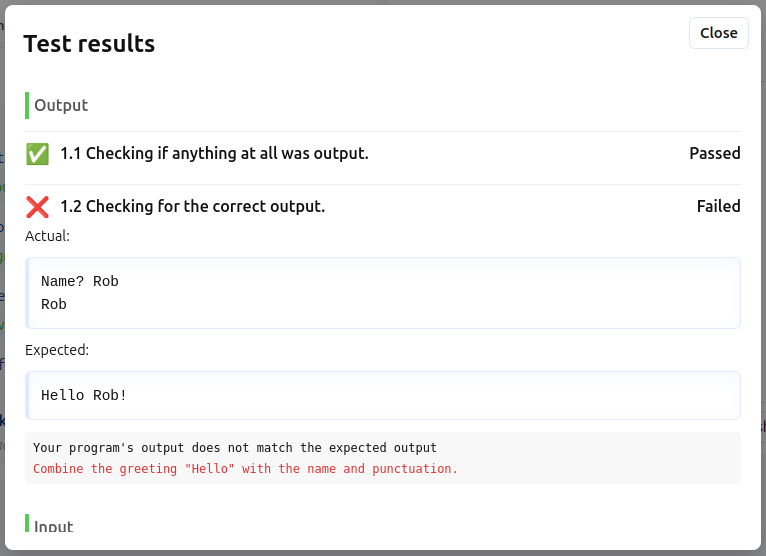

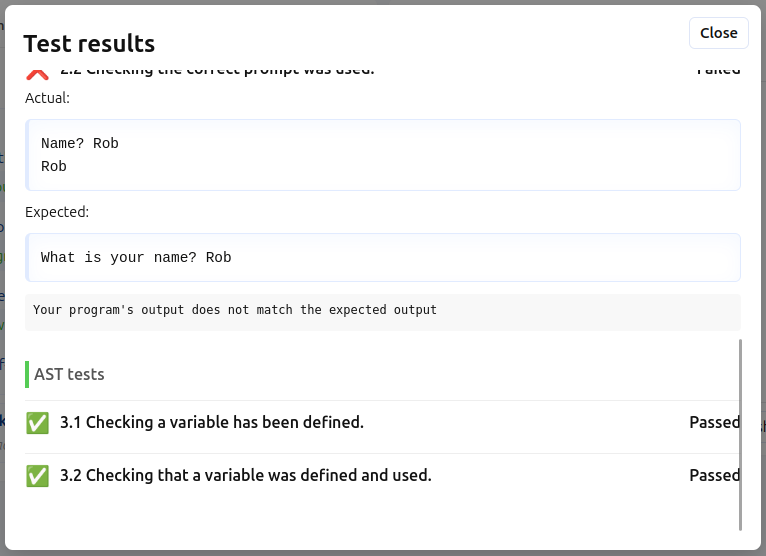

Here are some screenshots of the latest version with some of the new features.

-

01_feedback_indicator

01_feedback_indicator

-

02_user_code_ui

02_user_code_ui

-

03_editor_feedback

03_editor_feedback

-

04_input_tests

04_input_tests

-

05_ast_tests

05_ast_tests

-

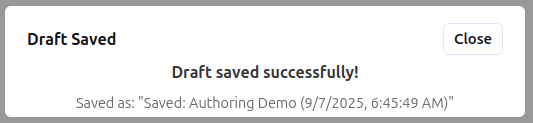

06_save_draft

06_save_draft

-

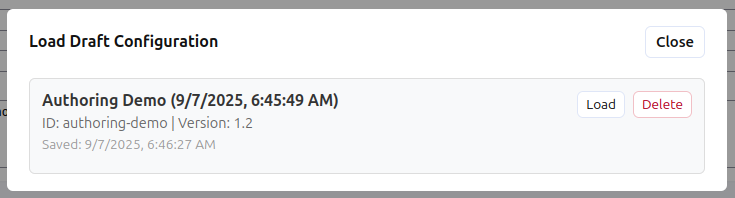

07_load_draft

07_load_draft

-

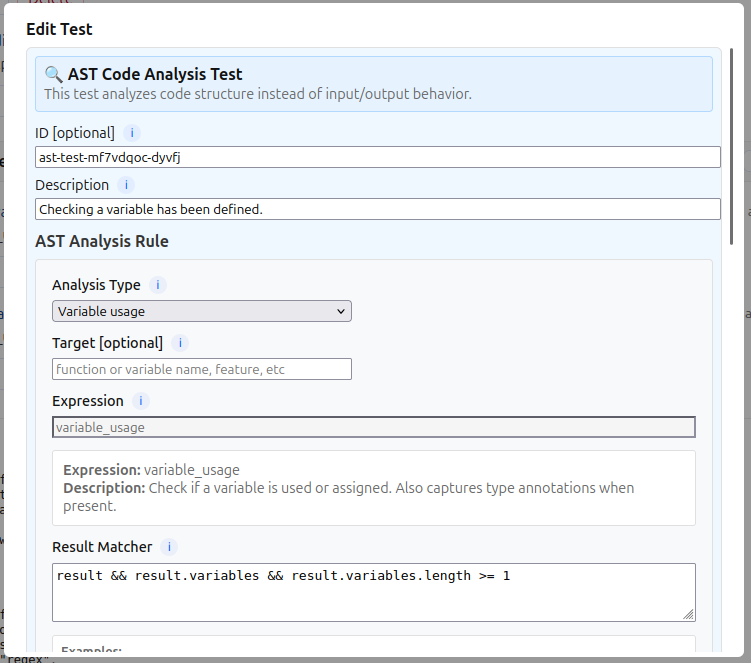

08_ast_builder_1

08_ast_builder_1

-

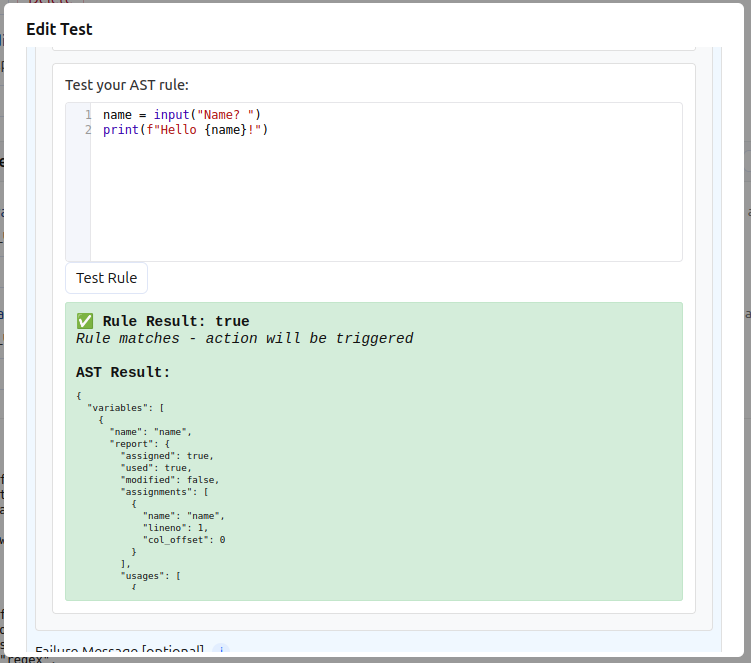

09_ast_builder_2

09_ast_builder_2

-

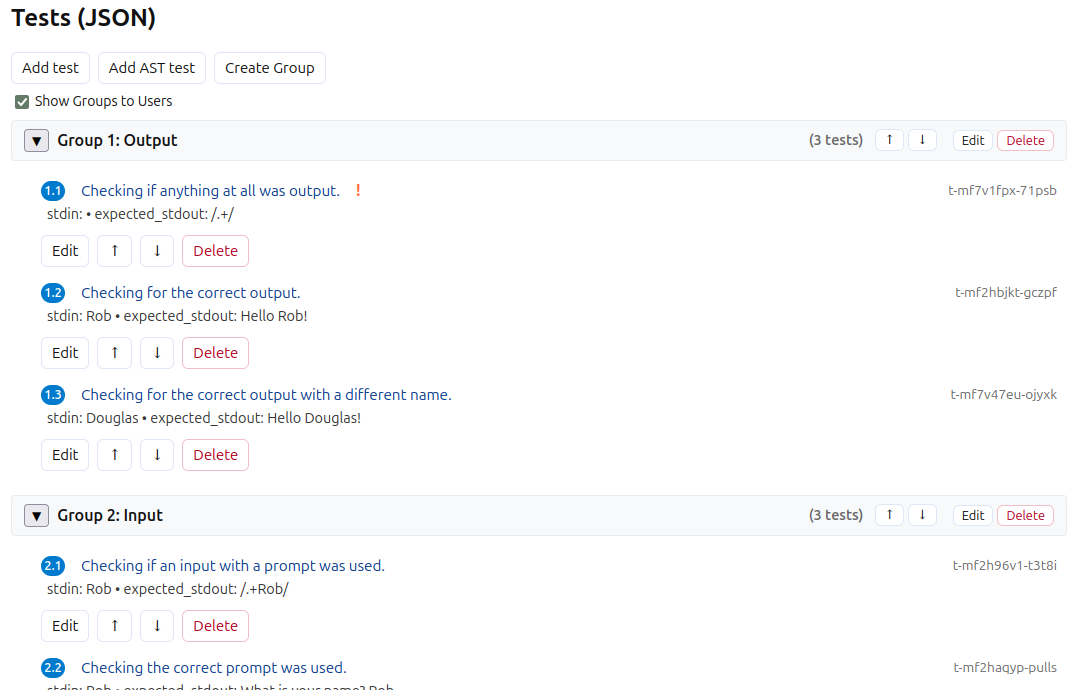

10_test_groups

10_test_groups

Abstract Syntax Tree feedback and tests

I had started playing around with Abstract Syntax Trees as a method for giving students feedback on their code in my old job, but since I wasn’t in an engineering role I didn’t have too much opportunity to implement them in anything other than limited scenarios. This project has been an opportunity to dig back into the topic and it’s been pretty fun!

The scenario author can now get reports on different types of language feature use in user code, interrogate the results (via a JS expression on the returned info), and provide feedback. Here are the different types of feedback available so far:

- Defined functions

- Variable usage

- Control structures

- Use of docstrings

- Defined classes and methods

- Import statements

- Magic numbers

- Exception handling

- Comprehensions

Most of them can either be narrowed down by name (e.g. has a certain variable been defined, used, modified, or given a type hint) or provide a general overview of their use, which the author can then write a test for (e.g. are there any recursive functions defined). Requiring the author to write a JS expression isn’t ideal since some of them are a bit clunky, but flexibility comes at a cost. When authors are writing an AST test or feedback item, they can provide some example Python code in the rule builder and run their rule against it in real time to check that it works.
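To make the idea a bit more concrete, here is a minimal sketch of the kind of report an analyzer like this might produce. It is not the tool's actual code: it uses CPython's standard `ast` module, the `analyze` and `has_recursive_function` names and the report shape are made up for illustration, and the rule is written as a Python function where the real tool takes a JS expression.

```python
import ast


def analyze(source: str) -> dict:
    """Return a small report on functions and names found in `source`.

    Hypothetical report shape, for illustration only.
    """
    tree = ast.parse(source)
    report = {"functions": {}, "variables": {}}

    for node in ast.walk(tree):
        if isinstance(node, ast.FunctionDef):
            # Treat a function as recursive if its body calls its own name.
            calls_self = any(
                isinstance(inner, ast.Call)
                and isinstance(inner.func, ast.Name)
                and inner.func.id == node.name
                for inner in ast.walk(node)
            )
            report["functions"][node.name] = {
                "has_docstring": ast.get_docstring(node) is not None,
                "recursive": calls_self,
            }
        elif isinstance(node, ast.Name):
            # Every bare name lands here, so called functions such as
            # `print` show up as "used" alongside ordinary variables.
            info = report["variables"].setdefault(
                node.id, {"assigned": False, "used": False}
            )
            if isinstance(node.ctx, ast.Store):
                info["assigned"] = True
            elif isinstance(node.ctx, ast.Load):
                info["used"] = True
    return report


def has_recursive_function(report: dict) -> bool:
    """Example rule: does the code define at least one recursive function?"""
    return any(f["recursive"] for f in report["functions"].values())


# Sample code an author might paste into the rule builder to try a rule.
sample = '''
def factorial(n):
    """Return n!."""
    if n <= 1:
        return 1
    return n * factorial(n - 1)

total = factorial(5)
unused = 42
print(total)
'''

report = analyze(sample)
```

With this sample, the rule `has_recursive_function(report)` holds, and `report["variables"]["unused"]` shows a name that was assigned but never read, which is the sort of thing targeted feedback can hang off.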

Authoring workflow improvements

Authors can now save and load drafts in IndexedDB, keyed by configuration ID and version. This makes it easier to develop and refine different scenario configs over time. The import and export to JSON file feature is still there too, so configs can be stored somewhere other than the browser and loaded into a repo (or into the server config folder) when they’re ready for use.

I also tweaked the feedback and test builders so that only options that were relevant to the feedback or test being built were active. For example stdout checks obviously can’t happen at edit time, so those are hidden from the builder UI unless the context is run time.

Test groups and run conditions

Test groups have been added, as well as run conditions for both test groups and individual tests within those groups. This means that groups and tests can be set to either:

- Always run

- Run only if the preceding test or group of tests passed

In the example config that I put together this meant I could assemble three groups of tests:

- Input tests

- Output tests

- AST code analysis tests

Each one of the groups ran independently, but I could have the tests within each group only run if the previous test in the group passed, providing scaffolded feedback about how the program was responding. For example, in the AST tests, if the user has not defined any variables we can provide targeted feedback. If they have defined a variable but not used it, they get different feedback.
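The run conditions can be sketched roughly like this. Everything here is hypothetical (the `ScenarioTest` and `ScenarioGroup` classes, the `run_if` values, and the `run_groups` function are illustrative names, not the app's config format), and it assumes a skipped test counts as "not passed" for whatever comes after it.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ScenarioTest:
    name: str
    check: Callable[[], bool]
    run_if: str = "always"  # "always" or "previous_passed"


@dataclass
class ScenarioGroup:
    name: str
    tests: list
    run_if: str = "always"  # "always" or "previous_passed"


def run_groups(groups):
    """Run groups in order and return {(group, test): outcome}."""
    results = {}
    previous_group_passed = True
    for group in groups:
        group_skipped = (
            group.run_if == "previous_passed" and not previous_group_passed
        )
        previous_test_passed = True
        group_passed = not group_skipped
        for test in group.tests:
            skip = group_skipped or (
                test.run_if == "previous_passed" and not previous_test_passed
            )
            if skip:
                outcome = "skipped"
            else:
                outcome = "passed" if test.check() else "failed"
            results[(group.name, test.name)] = outcome
            # Assumption: a skipped test also blocks the tests after it.
            previous_test_passed = outcome == "passed"
            group_passed = group_passed and previous_test_passed
        previous_group_passed = group_passed
    return results


# Two independent groups; the AST tests are chained within their group.
input_group = ScenarioGroup("input", [ScenarioTest("reads a name", lambda: True)])
ast_group = ScenarioGroup(
    "ast",
    [
        ScenarioTest("defines a variable", lambda: True),
        ScenarioTest("uses the variable", lambda: False, run_if="previous_passed"),
        ScenarioTest("uses a loop", lambda: True, run_if="previous_passed"),
    ],
)

results = run_groups([input_group, ast_group])
```

Here the "uses a loop" test is skipped because the test before it failed, so the user only sees feedback for the first thing that went wrong in that group.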

User editing and running improvements

Apart from a few UI changes, I’ve made some QoL improvements to the user side of the app as well.

- Ctrl/Cmd-Enter can be used to run code when the editor has focus

- A feedback activity indicator has been added to the tab to show when background feedback has appeared

- Added a download button to the code workspace so users can take their project and continue it elsewhere

(A)Introspection

I use GitHub Copilot and pay for the Pro tier, so I have some number of “premium” requests per month. I tend to use Claude for the model, which is one of their “1x” models, and ran out of requests towards the end of August, leaving me with a choice of GPT-4.1, GPT-4o, GPT-5 mini, and Grok Code Fast 1 (which I tried briefly and it was a complete disaster).

Running out of tokens was an interesting experience, since Claude is so damned good at implementing new features (even if the pseudo-personality is insufferable). Even after the month rolled over I found myself thinking more about some of the things that I had cheerfully just thrown at the LLM the previous month, and doing things myself, as well as model switching for different types of tasks. GPT-5 mini has generally been better about considered bug fixes than Claude (and is currently in the 0x free tier model list), so I would get Claude to kick off a set of features, and then when it got stuck, I’d switch to GPT-5 mini for the debugging and fixing.

I continue to be amazed at how good the models can be at rapidly implementing fairly significant new features. For example, the AST rules, building the analyzer, and wiring it into the app took maybe half an hour (with a few hours more of me refining what it’d done).

On the other hand, they are so spectacularly bad at other things. For example, last night I wanted to make the test code editing space in the AST rule builder a CodeMirror editor so the author could get syntax highlighting, indenting etc. The result was a messed up gutter and line numbers that appeared over the top of the code. After around an hour of trying to get the agent to fix things (both Claude and GPT-5 mini), I finally read the code properly myself, and all I had to do was write one line of CSS.