Pre(r)amble

This is part of a(n aspirationally weekly) series of posts documenting some of the process of (building|cat herding an AI agent to build) an easily hosted Python teaching tool built with just front-end JS and a WASM port of MicroPython.

- You can find Part 1 here

- You can find Part 2 here

- You can find Part 3 here

- You can find Part 4 here

- This is Part 5!

- You can find Part 6 here

I have a (somewhat) up-to-date version of this tool running on the site. Things might occasionally be broken or might not work the same way they did before.

There’s a changelog in the authoring interface to give you a bit of an idea of what’s new or different.

If you’ve used this before, you might need to do a hard refresh to clear out any cached JS or CSS. I should probably put some clearing of IDB storage in there too, just in case. Maybe next week.

What we had before

See last week’s post for some of the details and screenshots.

- Student verification codes (which are going to need some tweaks to the author side with the new problem list feature)

- Read-only file support in workspaces

- Per-test workspace files

What’s happened since

Here are some screenshots of the latest version with some of the new things from this week.

-

01_config_list_collapsed

01_config_list_collapsed

-

02_config_list_expanded

02_config_list_expanded

-

03_feedback_msg_optional

03_feedback_msg_optional

-

04_success_indicator_title

04_success_indicator_title

-

05_success_indicator_configlist

05_success_indicator_configlist

-

06_success_indicator_snapshot

06_success_indicator_snapshot

-

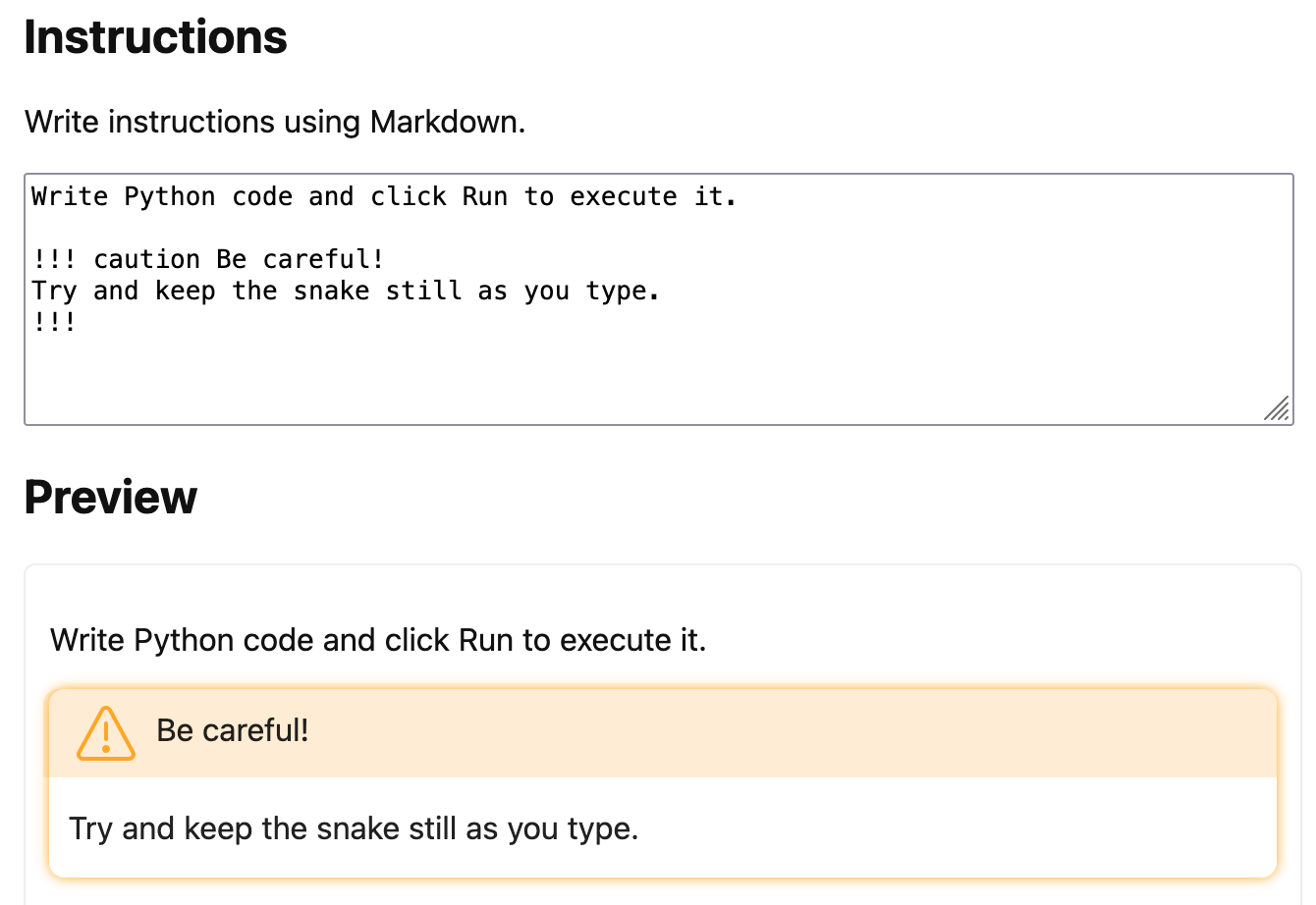

07_admonitions_markdown

07_admonitions_markdown

-

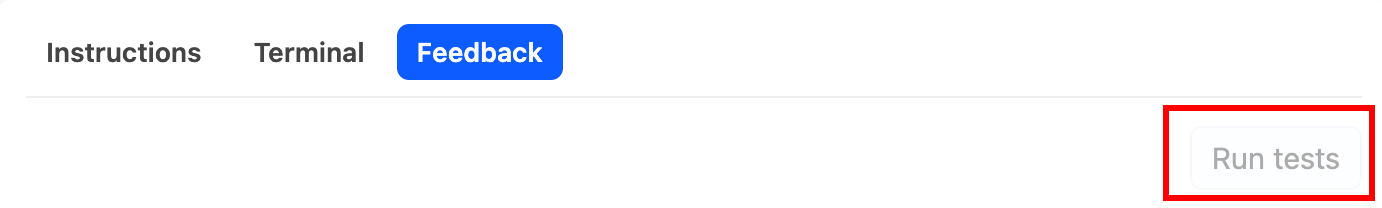

08_disabled_run_key

08_disabled_run_key

-

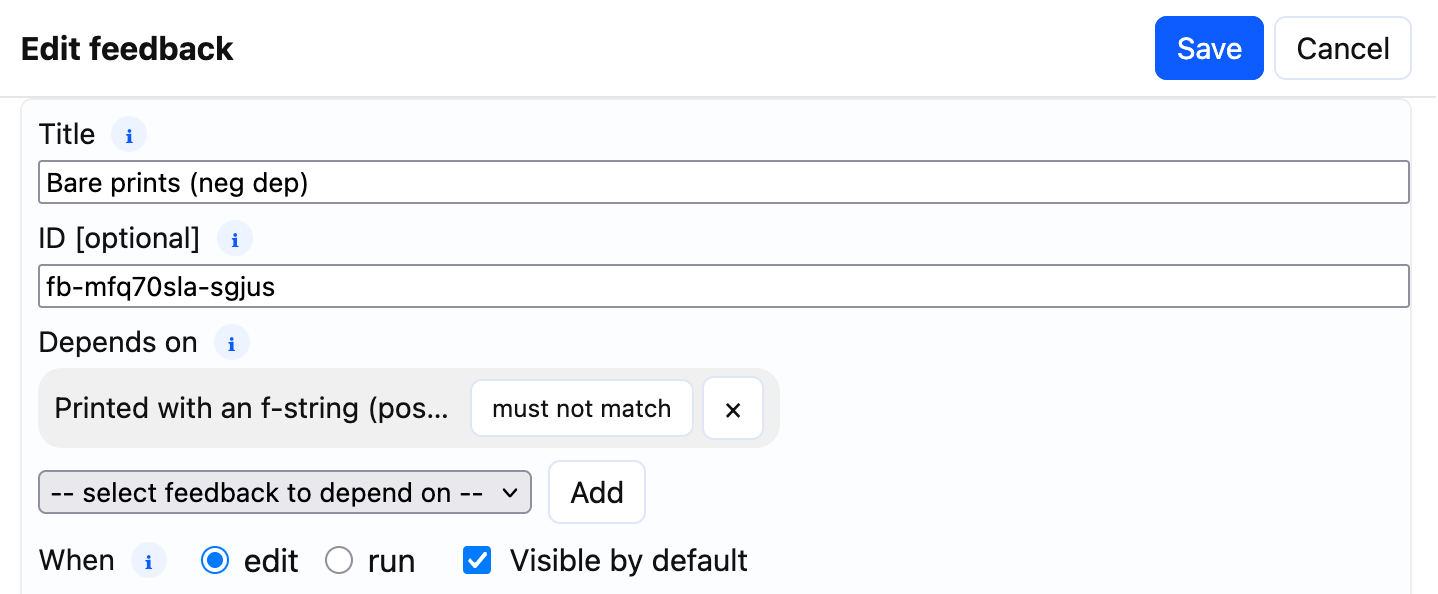

09_dependent_feedback_neg

09_dependent_feedback_neg

-

10_dependent_feedback_pos

10_dependent_feedback_pos

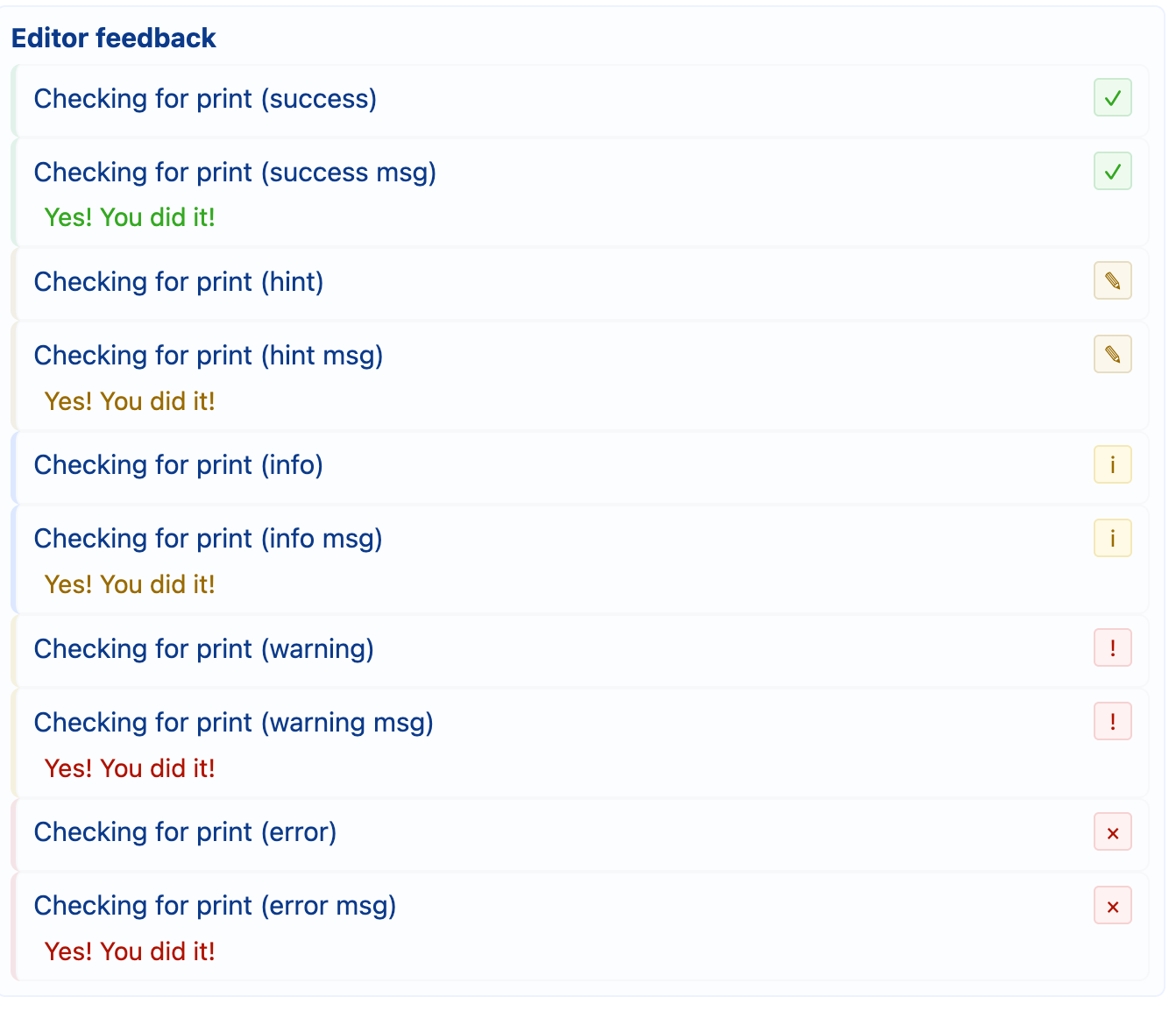

UI enhancement

I’ve been trying to make things generally better looking and nicer to use, given that I typically rock a pretty utilitarian aesthetic by default. Some of the changes (several are visible in the screenshots above) are:

- Shifting to non-emoji feedback indicators

- Feedback messages are now optional: the indicator and the title should do most of the job

- The run tests button is now visibly disabled when there are no tests to run

- Added an admonition extension to markdown rendering so authors can visually highlight different types of content
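The post doesn’t say which admonition syntax the renderer uses, so the following is a sketch assuming the `!!! kind "Title"` convention from Python-Markdown’s admonition extension, with a four-space-indented body. The hypothetical `splitAdmonitions` function splits authored markdown into plain and admonition segments before rendering.

```typescript
// Hypothetical syntax borrowed from Python-Markdown's admonition extension:
//   !!! note "Optional title"
//       Body lines indented by four spaces.
// The post doesn't say which syntax the app actually uses.
type Segment =
  | { type: "markdown"; text: string }
  | { type: "admonition"; kind: string; title: string; body: string };

const ADMONITION_START = /^!!!\s+(\w+)(?:\s+"([^"]*)")?\s*$/;

// Split markdown source into plain-markdown and admonition segments so each
// can be rendered separately (admonitions wrapped in a styled container).
function splitAdmonitions(source: string): Segment[] {
  const lines = source.split("\n");
  const segments: Segment[] = [];
  let buffer: string[] = [];

  const flushMarkdown = (): void => {
    if (buffer.length > 0) {
      segments.push({ type: "markdown", text: buffer.join("\n") });
      buffer = [];
    }
  };

  let i = 0;
  while (i < lines.length) {
    const match = ADMONITION_START.exec(lines[i]);
    if (!match) {
      buffer.push(lines[i]);
      i++;
      continue;
    }
    flushMarkdown();
    const kind = match[1].toLowerCase();
    // Without an explicit title, fall back to the capitalised kind ("note" -> "Note").
    const title = match[2] ?? kind.charAt(0).toUpperCase() + kind.slice(1);
    const body: string[] = [];
    i++;
    // The block continues over four-space-indented lines and blank lines.
    while (i < lines.length && (lines[i].startsWith("    ") || lines[i].trim() === "")) {
      body.push(lines[i].slice(4));
      i++;
    }
    // Drop trailing blank lines so they don't end up inside the block.
    while (body.length > 0 && body[body.length - 1].trim() === "") {
      body.pop();
    }
    segments.push({ type: "admonition", kind, title, body: body.join("\n") });
  }
  flushMarkdown();
  return segments;
}
```

Each plain segment could then go through the existing markdown renderer, with admonition bodies rendered the same way and wrapped in a container (for example a `div` with a class per kind) for styling.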

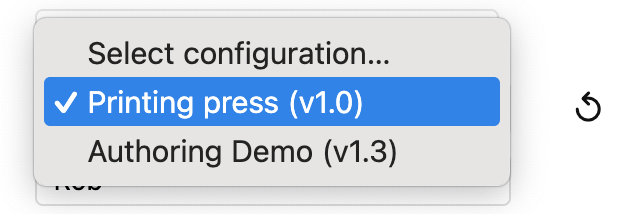

Problem lists

One of the things that bothered me about the initial design was the friction in getting multiple problems out to students. Each problem would have needed its own link with a URL parameter to load it. Given that some problems might not take very long to solve, this would mean lots of bouncing back to an LMS page or email. However, I still wanted single problems to be loadable on their own.

So now we support lists of problems (in a JSON file format) that can be passed to the app just like individual problems. If the config file passed to the app turns out to be a config list rather than a single problem, the user is presented with a menu to navigate through the different problem files found in the list (with the list’s own problem set title in the page header). This way, we can still respect the “client side only” nature of the app, but also make it possible to have a learning sequence.
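As a rough sketch of the “list or single problem?” decision, the TypeScript below checks a parsed config file’s shape and routes it accordingly. The field names (`title`, `problems`, `id`, `tests`) and the `classifyConfig` helper are hypothetical, not the app’s actual schema; the sketch only assumes a config list has a problem set title and an array of problem file paths.

```typescript
// Hypothetical shapes for illustration only; the post just says a config list
// is a JSON file listing problem files, with its own problem set title.
interface ProblemConfig {
  id: string;
  title: string;
  tests: unknown[];
}

interface ConfigList {
  title: string;      // problem set title shown in the page header
  problems: string[]; // paths or URLs of individual problem config files
}

type LoadedConfig =
  | { kind: "single"; problem: ProblemConfig }
  | { kind: "list"; list: ConfigList };

// Type guard: treat any object with a string `title` and an array of string
// `problems` as a config list.
function isConfigList(data: unknown): data is ConfigList {
  if (typeof data !== "object" || data === null) return false;
  const candidate = data as Record<string, unknown>;
  const problems = candidate.problems;
  return (
    typeof candidate.title === "string" &&
    Array.isArray(problems) &&
    problems.every((p) => typeof p === "string")
  );
}

// Route a parsed JSON document: lists get the navigation menu, anything else
// is handed to the existing single-problem loader (not validated here).
function classifyConfig(data: unknown): LoadedConfig {
  if (isConfigList(data)) {
    return { kind: "list", list: data };
  }
  return { kind: "single", problem: data as ProblemConfig };
}
```

Something like `classifyConfig(JSON.parse(text))` would then decide whether to render the problem navigation menu or load the problem directly. Checking shape rather than requiring an explicit type field keeps existing single-problem files loading as before, though an explicit marker would be less ambiguous.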

I have a config list containing simple test scripts that you can load here.

There is also a basic config list that loads by default.

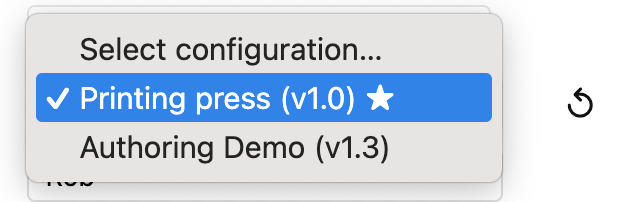

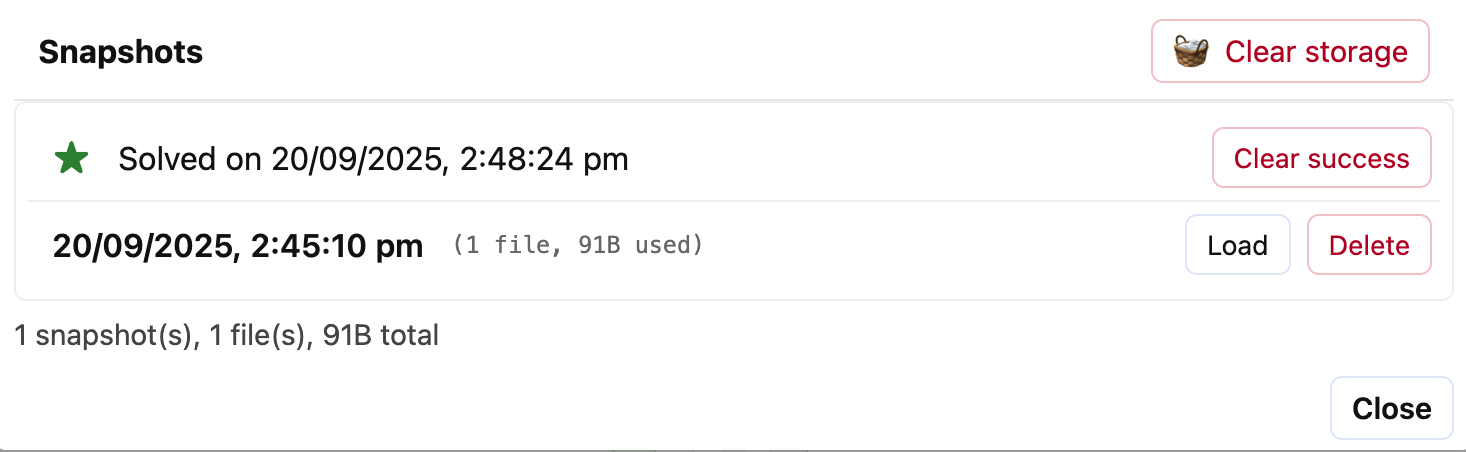

Persistent success indicators

Once students might be working through a whole list of problems rather than a single one, they’re less likely to finish in one session, so how do they keep track of their progress? Enter persistent success indicators in a few places:

- The page header now gets a success indicator if the student has passed the test suite for a single problem

- If a problem is part of a list, then we also badge problems with passing solutions in the drop-down menu for navigating between problems (this one was a struggle for the agent, and it broke things a few times 🫠)

- A ‘passing’ snapshot is created (or updated) each time a test suite is passed.

This gives students a few places where, if they pick up a problem set in another session, they get a clear visual indicator of whether or not they have passed a problem. The snapshot indicator also tells them when they last passed the test suite.
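A minimal sketch of the bookkeeping behind these indicators, in TypeScript: one ‘passing’ snapshot per problem, overwritten on each passing run and carrying a timestamp, plus a helper that badges passed problems in a list menu. `ProgressStore`, `recordPass` and `menuLabels` are hypothetical names, and an in-memory `Map` stands in for the browser storage (IndexedDB) the app actually persists to.

```typescript
// Hypothetical record shape; the real app persists to browser storage (IndexedDB),
// which is replaced here by an in-memory Map to keep the sketch self-contained.
interface PassingSnapshot {
  problemId: string;
  code: string;   // student code at the time the suite passed
  passedAt: Date; // shown as "last passed" on the snapshot
}

class ProgressStore {
  private passing = new Map<string, PassingSnapshot>();

  // Called whenever a full test suite passes: creates or overwrites the
  // single "passing" snapshot for this problem.
  recordPass(problemId: string, code: string, passedAt: Date = new Date()): PassingSnapshot {
    const snapshot = { problemId, code, passedAt };
    this.passing.set(problemId, snapshot);
    return snapshot;
  }

  // Drives the page-header indicator for a single problem.
  hasPassed(problemId: string): boolean {
    return this.passing.has(problemId);
  }

  // Drives the "last passed" time on the snapshot.
  lastPassed(problemId: string): Date | undefined {
    return this.passing.get(problemId)?.passedAt;
  }
}

// Label entries in a problem-list menu, badging problems that have a passing solution.
function menuLabels(problems: { id: string; title: string }[], store: ProgressStore): string[] {
  return problems.map((p) => (store.hasPassed(p.id) ? `✓ ${p.title}` : p.title));
}
```

Keying the passing snapshot by problem id means the same record can drive all three indicators: the header badge, the list menu badge, and the “last passed” time on the snapshot.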

Feedback rule dependencies

I love feedback flexibility, and from personal experience it’s frustrating when the ability to give feedback is limited by the tools you have to provide it. One of the testing features I added that made me pretty happy was conditional test runs and test grouping.

This time I added some flexibility to when feedback rules trigger:

- Each feedback item can depend on a list of other feedback items before it triggers

- Feedback dependency can be either “must match” or “must not match”

- Combining this with the default hidden behaviour means you can surface “just in time” feedback and build some pretty flexible feedback types
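One way dependency evaluation could work, sketched in TypeScript under some assumptions: “must match” and “must not match” are taken to refer to whether the other feedback item itself fires (including its own dependencies), rules are looked up by id, and circular dependencies are rejected. The `FeedbackRule` shape and `evaluateFeedback` function are hypothetical, not the app’s code.

```typescript
// Hypothetical shapes: each feedback item may depend on other items, each
// dependency being either "must match" or "must not match".
type DependencyMode = "must_match" | "must_not_match";

interface FeedbackDependency {
  id: string; // id of another feedback item
  mode: DependencyMode;
}

interface FeedbackRule {
  id: string;
  matches: (output: string) => boolean; // the rule's own condition
  dependsOn?: FeedbackDependency[];
}

// A rule fires only if every dependency is satisfied AND its own condition matches.
// Results are memoised, so list order doesn't matter and shared dependencies
// are evaluated once.
function evaluateFeedback(rules: FeedbackRule[], output: string): Set<string> {
  const byId = new Map(rules.map((r) => [r.id, r] as const));
  const memo = new Map<string, boolean>();
  const visiting = new Set<string>();

  const fires = (id: string): boolean => {
    const cached = memo.get(id);
    if (cached !== undefined) return cached;
    const rule = byId.get(id);
    if (!rule) throw new Error(`Unknown feedback rule: ${id}`);
    if (visiting.has(id)) throw new Error(`Circular feedback dependency at: ${id}`);
    visiting.add(id);
    const depsOk = (rule.dependsOn ?? []).every((dep) =>
      dep.mode === "must_match" ? fires(dep.id) : !fires(dep.id),
    );
    const result = depsOk && rule.matches(output);
    visiting.delete(id);
    memo.set(id, result);
    return result;
  };

  return new Set(rules.filter((r) => fires(r.id)).map((r) => r.id));
}
```

For example, a “wrong total” hint could depend on a “syntax error” item with `must_not_match`, so it only appears once the student’s code actually runs, and a follow-up hint could depend on the “wrong total” item with `must_match`.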

(A)Introspection

This week has involved a fair bit of messing around trying to spec out problems in a way that makes it clear what I don’t want broken, plus a whole lot of regression testing. Much of that was manual, partly because I still need to get around to putting together some decent e2e tests, and partly because there are so many round trips into browser storage that more isolated tests aren’t always a great indication of whether something has worked (or rather, hasn’t broken anything).

One of the (funny|frustrating) things about instructing an agent is its utter lack of self-awareness. There have been a couple of sessions where I’ve been working on a feature and it hasn’t worked out the way I intended, or, after using the initial version a few times, I’ve changed my mind about how it should work. The agent then turns around and tries to implement legacy migration features for something that hasn’t even been committed yet, let alone pushed. The minor, funny version of this is that it’ll write some code, I’ll test it and authorise the next step, and it’ll then tell me all about the changes it sees I’ve made, despite having just written the code itself.