So there’s a certain element of hyperbole coming up. Just saying.

Google Allo (which of course makes me think of the classically cheesy ‘Allo ‘Allo) was outlined in the recent Google I/O keynote. One of the features which bears some consideration is Smart Reply, which suggests replies to messages and learns from your responses over time (and, I assume, also learns from the aggregate, playing to Google’s strengths in large-scale data analysis).

Over at the Google blog they have this to say about Smart Reply:

> Smart Reply learns over time and will show suggestions that are in your style. For example, it will learn whether you’re more of a “haha” vs. “lol” kind of person. The more you use Allo the more “you” the suggestions will become. Smart Reply also works with photos, providing intelligent suggestions related to the content of the photo. If your friend sends you a photo of tacos, for example, you may see Smart Reply suggestions like “yummy” or “I love tacos.”

This sort of suggested reply scheme isn’t new. Google Inbox has done it for a while, and iOS has had suggested words above the keyboard for some time as well (although I doubt anyone uses them in the predictive sense, as opposed to as suggestions once you start typing, except for entertainment purposes).

One of the things I worry about is the problem we already have with the idea of filter bubbles: The content which algorithms predict we will want is preferentially shown to us based on what we have viewed, liked, tweeted about and so on.

While the idea of filter bubbles is a bit more extreme than suggested replies, the concept is pretty much the same: an algorithm suggests ideas to us. Some of those suggestions will be based on how we have reacted to similar content before, but Google’s strength has always been analysis of aggregate data, so some aspects are always going to be taken from how other users have reacted and trained the system. As a result there will probably be some homogeneity to the suggested replies.
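To make that worry a bit more concrete, here is a toy sketch of what blending personal and aggregate data might look like. To be clear, this is purely my own illustration and not how Google actually does it: the `suggest_replies` function, the `personal_weight` parameter and all the numbers are made up, and the real system is presumably far more sophisticated than counting replies.

```python
from collections import Counter


def suggest_replies(personal, aggregate, personal_weight=0.3, k=3):
    """Rank replies by a weighted blend of two frequency tables.

    Hypothetical illustration only: `personal` and `aggregate` map a reply
    to how often it was used, and `personal_weight` is an invented knob.
    """
    def normalise(counts):
        total = sum(counts.values())
        return {reply: n / total for reply, n in counts.items()} if total else {}

    mine = normalise(personal)
    everyone = normalise(aggregate)
    scores = {
        reply: personal_weight * mine.get(reply, 0.0)
        + (1 - personal_weight) * everyone.get(reply, 0.0)
        for reply in set(mine) | set(everyone)
    }
    # Highest score first; ties broken alphabetically so the order is stable.
    return sorted(scores, key=lambda reply: (-scores[reply], reply))[:k]


# Made-up usage counts: what everyone says vs. what two individuals say.
aggregate = Counter({"lol": 500, "yummy": 300, "I love tacos": 200})
alice = Counter({"haha": 8, "yummy": 2})
bob = Counter({"blimey": 5})

alice_top = suggest_replies(alice, aggregate)
bob_top = suggest_replies(bob, aggregate)
print(alice_top)
print(bob_top)
```

With these invented numbers, Alice and Bob have quite different personal styles, yet both get “lol” as their top suggestion because the aggregate dominates the blend. Turn `personal_weight` up and the lists diverge; leave it low and everyone drifts towards the same replies.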

Assuming that Smart Reply gets good enough to actually use day to day, at what point do we as users start thinking of these replies as “good enough” and stop thinking about the nuance of the language we use? A parallel situation that I think already shows this sort of effect is the use of emoji in text messages, tweets and so on. We often use a “good enough” glyph in place of articulating our thoughts more thoroughly. (Full disclosure: I adore the shocked face emoji and use it at every opportunity 😱)

So what I wonder is, if we allow machine learning algorithms to suggest responses for us, do we reach the point where we actually stop thinking about the content we view as thoroughly as we might otherwise do (or perhaps as much as we should do)?

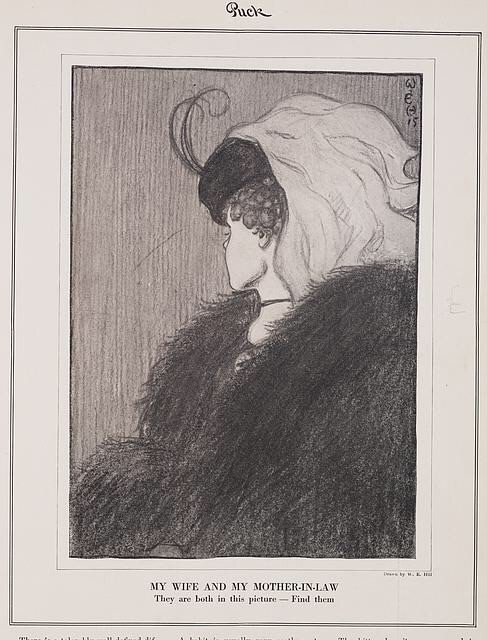

“My Wife and my Mother-In-Law” William Ely Hill

As a relatively unimportant example, let’s look at images which demonstrate perceptual ambiguity, such as one I’m sure everyone has seen: the old woman/young woman. One person looks at the picture and responds that it’s a young woman, another that it’s an old woman, or perhaps we think about it more, see both and comment on that. But what if Google’s image analysis kicks in and suggests that it’s a photo of a young woman? Do we accept this suggestion and not see the old woman?

It’s highly likely that Google’s software has already identified something like this and is ready to provide an accurate analysis of what the image is, so what about something that is more difficult to un-see once it has been viewed in a particular way? Whilst on holiday recently my wife and I were watching afternoon TV while waiting for the car to charge and a show on optical illusions came on. Many of the examples were tricks of perspective, but the one that stuck with me was the spinning dancer, which could appear to be spinning either clockwise or anticlockwise. With the right suggestion, it was possible to perceive it as spinning either way, but once we had seen it one way it was quite difficult to switch to the opposite direction. In this instance my wife initially saw it as spinning anticlockwise and I saw it the other way. A simple suggestion can go a long way in shaping how this is perceived.

Spinning Dancer Nobuyuki Kayahara CC BY-SA 3.0

So anyway, while I’ve been listening to and reading opinions about chat bots everywhere (Facebook) and now Smart Reply (Google), with people insisting that this is The Future of eCommerce or some such, I’m left wondering whether they’ll have a subtle effect on our thinking when they get to the point of actually being reliable enough to be plausibly accurate.