In 2019 I started up a programming-oriented Data Science class with my Year 10s. I ran two classes during the year, each spanning a semester. My aim for the course was to introduce students to different ways of storing, retrieving, and working with data, as well as give some coverage over different types of data and some operations that you can perform with it (e.g. numeric, text, spatial).

I ran a different main project with each class: during the first semester I looked at analysing data from the Australian Federal Government Hansard. Unfortunately, the students in the group weren’t very interested in it, and (like many of my first time projects) the scope turned out to be overly broad, meaning students had trouble figuring out what they would do from all the alternatives of what they could do. Tangentially, working with the XML from the Hansard is a great (or terrible, depending on your perspective) activity in data cleaning - they’ve made some… interesting decisions about how to format their data inside the XML structure.

The second project was processing text to determine sentiment. This worked a lot better, and the project had a nice natural flow from the basic concepts of tokenisation and weighting, to some concepts like stop words, before throwing in some (large) real world data sets to test out our algorithm. It’s worthwhile noting here that it is very naive, and only gauges positive or negative sentiment, with no other categorisation like angry, sad, etc.

Progression of Sentiment Analysis

We start out by looking at some very basic examples and developing an algorithm. This might be some identification of simple tokens, or to start with just ordering the sentences from most positive to most negative and articulating what differentiates each sentence from its neighbours.

During the ranking process it becomes apparent that not all sentiment tokens are created equal, and so some sort of weighting system should come into play, meaning we can develop a scoring algorithm which we can apply to each sentence. I threw in a couple of ambiguous cases so that we also had to think about things like modifier words and symbols (e.g. ’not’ coming before a sentiment token, shouty caps, or exclamation marks).

This is where we can start bringing in how to tackle programming the algorithm and process the simple sentences to see how it fares and whether or not the results match our expectations discussed at the start of the project. This is as simple as initialising a score variable, splitting the sentence on spaces, and normalising by stripping punctuation and changing to a standard case (typically lower). We can then examine each word, checking for membership in our lists of sentiment tokens (in class we grouped them to v_bad, bad, good, and v_good with anything not being in these lists not contributing to the score.

This worked pretty well with the sample sentences, but it was obvious that it did not deal with modifiers at all, so “not good” was counted as a positive sentiment in the same way that “good” was. In the previous code snippet, the enumerate function was used to get the position of each word in the sentence along with the word itself, so that we could examine the preceding word as well. This had the added benefit of being able to check for amplifying words like “very” at the same time. Modifying or inverting was simply a case of adding to, subtracting from, or multiplying (e.g. x2 for a boost, or x -1 for an inversion) the score for the current word.

From here we looked at the importance of stop words. For example our modifier words were sometimes separated by stop words, such as in one of the examples “but not in a good way”. Filtering out any stop words prior to analysis and weighting made it possible to invert or amplify words which were not next to each other, but still related. This list of stop words we used can be found here: http://xpo6.com/list-of-english-stop-words/

score = 0

words = s.split()

words = [w for w in words if w.lower().strip(punctuation) not in stopwords]

for pos, word in enumerate(words):

mod = 1

if word.isupper():

mod += 0.5

word = word.lower().strip(punctuation)

if pos != 0:

if words[pos-1].lower().strip(punctuation) in mods:

mod += 0.25

if words[pos-1].lower().strip(punctuation) in inverters:

mod *= -1

if word in v_good:

score += 2 * mod

elif word in good:

score += 1 * mod

elif word in v_bad:

score -= 2 * mod

elif word in bad:

score -= 1 * mod

An extract of the code showing the implementation of the scoring algorithm.

Once we played with the values to get to the point where we were happy with the (somewhat arbitrary) scores, all that was left to do was try it out with some real world data.

The University of Pittsburgh Mult-Question Perspective Answering site has a few interesting sets of data for download, and we grabbed a copy of the Subjectivity Lexicon (which is available for free, but you need to supply contact details), and split it up for use in our program. This gave use a huge list of words, along with a strong or weak associate with positive or negative use (and more practice splitting strings in Python :).

type=strongsubj len=1 word1=abomination pos1=noun stemmed1=n priorpolarity=negative

type=weaksubj len=1 word1=above pos1=anypos stemmed1=n priorpolarity=positive

type=weaksubj len=1 word1=above-average pos1=adj stemmed1=n priorpolarity=positive

type=weaksubj len=1 word1=abound pos1=verb stemmed1=y priorpolarity=positive

type=weaksubj len=1 word1=abrade pos1=verb stemmed1=y priorpolarity=negative

type=strongsubj len=1 word1=abrasive pos1=adj stemmed1=n priorpolarity=negative

A sample of the University of Pittsburgh data. We only used the type, word1, and priorpolarity key value pairs.

Lastly we needed some real world data which we could use to compare our results, and to stop using our toy data set of contrived sentences. Since the initial premise for the program was analysis of product reviews, what better place to look than Amazon. I found a set of Amazon reviews that had been collected for analysis by Jianmo Ni from the University of California San Diego. Unlike the previous sets of data, this was nicely formatted as JSON, and there was a large range of different review categories, all of which were suitably huge (definitely from a student perspective, used to dealing with tens of data points). As the smallest set was more than enough for our purposes, I chose the Amazon Instant Video category, weighing in at a mere 37126 entries.

{"reviewerID": "A11N155CW1UV02", "asin": "B000H00VBQ", "reviewerName": "AdrianaM", "helpful": [0, 0], "reviewText": "I had big expectations because I love English TV, in particular Investigative and detective stuff but this guy is really boring. It didn't appeal to me at all.", "overall": 2.0, "summary": "A little bit boring for me", "unixReviewTime": 1399075200, "reviewTime": "05 3, 2014"}

{"reviewerID": "A3BC8O2KCL29V2", "asin": "B000H00VBQ", "reviewerName": "Carol T", "helpful": [0, 0], "reviewText": "I highly recommend this series. It is a must for anyone who is yearning to watch \"grown up\" television. Complex characters and plots to keep one totally involved. Thank you Amazin Prime.", "overall": 5.0, "summary": "Excellent Grown Up TV", "unixReviewTime": 1346630400, "reviewTime": "09 3, 2012"}

{"reviewerID": "A60D5HQFOTSOM", "asin": "B000H00VBQ", "reviewerName": "Daniel Cooper \"dancoopermedia\"", "helpful": [0, 1], "reviewText": "This one is a real snoozer. Don't believe anything you read or hear, it's awful. I had no idea what the title means. Neither will you.", "overall": 1.0, "summary": "Way too boring for me", "unixReviewTime": 1381881600, "reviewTime": "10 16, 2013"}

A sample of the Amazon Instant Video data. “overall” gives the star rating, and we also looked at the “reviewText” field. It would be interesting to see how useful the “helpful” data was in the future too.

Working with JSON let us look at working with libraries (although we had done some work with CSV prior to this) and the dictionary data structure.

One of the great things about this data set, is that whilst we could analyse the review text and see how our algorithm scored each one, each review also contained the star rating given by the reviewer, which meant that we could compare the implicit review sentiment based on the text to the explicit review rating and use this as a method for determining either the honesty of the review, or as a metric for evaluating the accuracy of our algorithm.

We ended up running out of time to do the final analysis, but I’ll manage our time better next time I run with this project. I put together a quick program of the work we did in class comparing the extremes of sentiment scores to star ratings, which turned out to be 73% accurate. Not too bad for a toy program that simplifies the problem quite a lot!

What worked and what didn’t

As far as engagement goes, I think this worked really well - students followed the flow most of the way and I think the complexity of the main ideas were about right. Splitting, scoring, stop words, iterating through a list of words: all of these worked out fine.

Where things tended to fall apart a bit is when we got to importing the larger files. Even though the sentiment words and the JSON reviews were quite simple as individual entries, somehow the idea of processing tens of thousands of them was a bit intimidating.

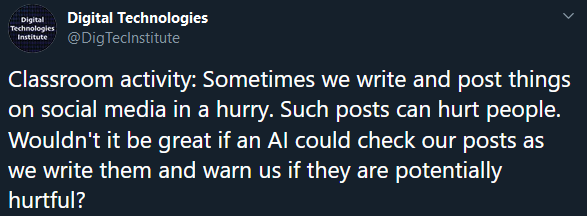

I was originally going to do an activity looking at identifying online chat behaviour, being inspired by this tweet from Karsten Schulz (although taking a different approach). I ended up being put off by the difficulty of finding good sample data to work with that was real world but fairly tame. I might have another crack at it later on and maybe incorporate it into an IRC bot or something similar.

Some final code

Although we ran out of time in class, I put together a bit of code which compares Amazon review star ratings with calculated sentiment and tries to figure out how accurate the simple scoring algorithm is. It isn’t quite finished, but I doubt that will change for a while.

from string import punctuation

from stopwords import stops

from math import floor

from json import loads

sentiment_words = {} # word: strength, +ve -ve numbers for polarity

with open("subjclues.txt") as f:

line = f.readline().strip("\n")

while line:

pairs = line.split(" ")

# 0, 2, -1

polarity_type = pairs[0].split("=")[1]

word = pairs[2].split("=")[1]

strength = pairs[-1].split("=")[1]

mod = 1

if strength == "negative":

mod *= -1

if polarity_type == "strongsubj":

mod *= 2

sentiment_words[word] = mod

line = f.readline().strip("\n")

# customise good/bad for context

def add_word(word, weight):

if word not in sentiment_words:

sentiment_words[word] = weight

else:

print(f"Warning: {word} is already in word list with weighting {sentiment_words[word]}.")

def remove_word(word):

if word in sentiment_words:

del sentiment_words[word]

else:

print(f"Warning: {word} not found in word list.")

# e.g. 'buy' is a good word for product reviews

add_word("buy", 1)

remove_word("really")

remove_word("incredibly")

remove_word("very")

remove_word("just")

remove_word("super")

mods = ["really", "incredibly", "very", "super"]

inverters = ["not", "isn't"]

def analyse(s):

score = 0

# break the sentence up into a list of individual words

words = s.split()

#print(f"Analysing: {s}")

# filter out stop words by recreating the list only adding words

# which are not in the stop word list

# this list comes from http://xpo6.com/list-of-english-stop-words/

words = [w for w in words if w.lower().strip(punctuation) not in stops]

# enumerate gives us position, value pairs for lists

# pos will store

for pos, word in enumerate(words):

# This changes the weighting of a sentiment word

mod = 1

if word.isupper():

mod += 0.5

word = word.lower().strip(punctuation)

# check if the previous word is a modifier word

# this should really pay attention to things like sentence endings, but we'll

# leave that for a later version

if pos != 0: # not the very first word, since that has no previous word

prev = words[pos-1].lower().strip(punctuation)

if prev in mods:

mod += 0.25

elif prev in inverters:

mod *= -1

if word in sentiment_words:

score += sentiment_words[word] * mod

#print(f"\t{word} - {sentiment_words[word]} * {mod}")

# Global sentence modifiers. These change the weighting of the whole

# sentence, not just the individual words.

if s[-1] == "!":

score *= 1.5

#print("\tExclamation! - * 1.5")

if s.isupper():

score *= 1.25

#print("\tShouty CAPS - * 1.25")

#print(f"\tScore: {score}")

return score

with open("Amazon_Instant_Video_5.json") as f:

line = f.readline()

matches = 0

total = 0

# collect and graph sentiment scores

scores = []

while line:

review = loads(line)

score = analyse(review["reviewText"])

scores.append(score)

overall = review["overall"]

# check if negative sentiment matches poor overall (1-2 -ve, 3 neutral, 4-5 +ve)

if (score < 0 and overall < 3) or (score > 0 and overall > 3):

matches += 1

elif score == 0 and overall == 3:

matches += 1

else:

pass

#print(f"Mismatch score {score} vs rating {overall}")

total += 1

line = f.readline()

print(f"\nSummary:\n\tTotal reviews: {total}\n\tTotal matches: {matches}\n\tMatch %: {int(matches / total * 100)}")

print(f"\nScore max: {max(scores)}\nScore min: {min(scores)}")

score_range = max(scores) - min(scores)

increments = int(score_range / 20) # something arbitrary for histogram buckets

buckets = [0]*20

for score in scores:

bucket = floor(score/increments)

buckets[bucket] += 1

mins = int(min(scores))

for i, bucket in enumerate(buckets):

perc = int(bucket / total * 100) / 2

b = i * increments + mins

print(f"{b:5} to {b + mins - 1:5} " + "*" * int(perc))